As said during the design [1], all the business logic related to the ET part will be written in PowerShell. For those who do not know the language: it is a Microsoft product that can also be installed and used on Linux [2], although I am not sure that would make sense here. It gives a more flexible and advanced experience than the regular command line.

Getting started with PowerShell is very easy: all you need is an editor. I suggest Visual Studio Code [3], which is free, lightweight, supports many languages and also provides a debug mode that can help a lot when troubleshooting.

Coming back to our topic: first we need a way to log (on screen and to a file) the actions the script performs. To do that we'll create an ad-hoc function named WriteLog that prints whatever we want both in the shell and to a file.

- Screen writing will be covered by Write-Host "text to write"

- File writing will be covered by Add-Content File -Value "text to write"
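
As a minimal sketch of these two building blocks on their own, the snippet below prints a line and appends the same line to a file. The file name `demo.log` and the use of the temp folder are assumptions for illustration only; they are not part of the script built in this post.

```powershell
# Hypothetical demo file in the temp folder (not the log file used later on)
$demoFile = Join-Path ([System.IO.Path]::GetTempPath()) 'demo.log'

# Start clean so that repeated runs give the same result
if (Test-Path $demoFile) { Remove-Item $demoFile }

# Screen only
Write-Host "text to write"

# Appends one line to the file, creating the file if it does not exist yet
Add-Content -Path $demoFile -Value "text to write"

# Read the file back to check what was written
Get-Content $demoFile
```

Add-Content always appends, so running the two writing lines several times without the cleanup step would simply add more rows to the same file.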

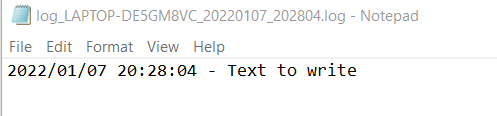

In the file output we also add a timestamp to each row we write.

    function WriteLog
    {
        Param (
            # The text to log; there is no log without it
            [Parameter(Mandatory)]
            [string]$LogString
        )

        # Timestamp added only to the row written to file
        $Stamp = (Get-Date).ToString("yyyy/MM/dd HH:mm:ss")
        $LogMessage = "$Stamp - $LogString"

        # $LogFile is set by the script before the function is called
        Add-Content $LogFile -Value $LogMessage
        Write-Host $LogString -ForegroundColor White
    }

The first statement is the keyword Param, which is used to declare the parameters of the function. In this case there is only one, the text to log, and it is marked as Mandatory since there is no log without a text to log.
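
To see what Mandatory does in practice, here is a small sketch. `Show-LogText` is a hypothetical stand-in that only reuses the same Param block as WriteLog, so it can be run without a log file.

```powershell
# Hypothetical stand-in with the same Param block as WriteLog
function Show-LogText
{
    Param (
        [Parameter(Mandatory)]
        [string]$LogString
    )
    "Logging: $LogString"
}

# Positional and named calls are equivalent
Show-LogText "Text to write"
Show-LogText -LogString "Text to write"

# An empty string is rejected by a mandatory [string] parameter
try {
    Show-LogText -LogString ""
}
catch {
    "Rejected: $($_.Exception.Message)"
}
```

Calling the function with no argument at all in an interactive session makes PowerShell prompt for the missing value instead of running the body.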

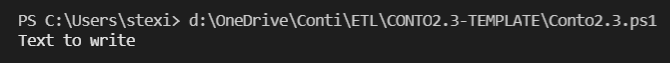

Let's try it out:

    WriteLog "Text to write"

Executing the script from the command line (or in the Visual Studio Code IDE), we get the expected text in the shell's response. Nothing can be written to file yet, because $LogFile has not been defined.

Good, but not enough: we also want to write to a physical file. To do this we need to identify the file to write to, and we place it in the same folder as the script.

$myDir = Split-Path -Parent $MyInvocation.MyCommand.Path

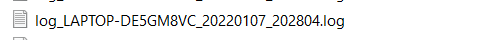

$Logfile = "$myDir\log_$($env:computername)_$((Get-Date).toString("yyyyMMdd_HHmmss")).log"In the first row we are getting the path of the folder of the script and store it in the variable . In the second line we are adding the file Log name composed as the machine name plus a timestamp in order to write a different file at each run.
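
The same path can be built in other ways. The sketch below is an alternative, not what the script in this post uses: it relies on the automatic variable `$PSScriptRoot` (available from PowerShell 3.0 when the code runs from a saved script file), on `Join-Path` instead of a hard-coded backslash, and on `[Environment]::MachineName` for the machine name. The variable names and the fallback to the current location are assumptions made for illustration.

```powershell
# Folder of the running script; falls back to the current location
# when the code is pasted into an interactive session (assumption for the demo)
$scriptDir = if ($PSScriptRoot) { $PSScriptRoot } else { (Get-Location).Path }

# Timestamp that makes the file name different at each run
$stamp = (Get-Date).ToString("yyyyMMdd_HHmmss")

# Machine name plus timestamp, same pattern as in the article
$logName = "log_$([Environment]::MachineName)_${stamp}.log"

# Join-Path inserts the separator that fits the current operating system
$demoLogFile = Join-Path $scriptDir $logName

$demoLogFile
```

This variant may be worth considering if the script ever has to run on Linux, where the backslash is not the path separator.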

Running the script again, we now have a log file in the folder.

Please note how simple it is to build a string from variables in PowerShell: in $LogMessage = "$Stamp - $LogString" we just write the variables (starting with $) inside a double-quoted text and PowerShell replaces them with their values. Quick and smart.
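
A short sketch of how this expansion behaves, using fixed sample values chosen only for illustration:

```powershell
$Stamp     = "2022/01/07 10:30:00"   # fixed sample value, not a real timestamp
$LogString = "Text to write"

# Double quotes: variables are replaced with their values
$expanded = "$Stamp - $LogString"

# Single quotes: the text is kept exactly as typed
$literal = '$Stamp - $LogString'

# $( ) is needed to expand a property or a method call inside a string
$length = "Length: $($LogString.Length)"

$expanded   # 2022/01/07 10:30:00 - Text to write
$literal    # $Stamp - $LogString
$length     # Length: 13
```

The `$( )` form is the same one used in the log file name to embed `$env:computername` and the `Get-Date` call.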

To recap: we have created a first version of the PowerShell script, which logs a sample text both on screen and to a file. Note that the WriteLog function sits at the top of the script, while it is only invoked later, in the bottom part. This is more than a convention: PowerShell runs a script from top to bottom, so a function has to be defined before the line that calls it, otherwise the call fails because the command is not recognized.

    #begin FUNCTIONS

    function WriteLog
    {
        Param (
            [Parameter(Mandatory)]
            [string]$LogString
        )

        $Stamp = (Get-Date).ToString("yyyy/MM/dd HH:mm:ss")
        $LogMessage = "$Stamp - $LogString"

        Add-Content $LogFile -Value $LogMessage
        Write-Host $LogString -ForegroundColor White
    }

    #end FUNCTIONS

    # Get the current Directory
    $myDir = Split-Path -Parent $MyInvocation.MyCommand.Path

    # Set the file log name
    $LogFile = "$myDir\log_$($env:computername)_$((Get-Date).ToString("yyyyMMdd_HHmmss")).log"

    WriteLog "Text to write"

You can download the zip with the script here [4] to try it on your own.

[1] https://www.beren.it/en/2022/01/07/conto2-3-the-design/

[3] https://code.visualstudio.com/download

[4] https://www.beren.it/wp-content/uploads/2022/01/CONTO2.3-01.zip